It's been a while since the last one of these, but now, as we're celebrating midsummer and nightless nights here in Finland, I think it's time for me to sit down and write a bit of a catch-up post about what's going on with MUTA, the Multi-User Timewasting Activity.

Officially developing an MMORPG solo

To recap, MUTA is a free and open source MMORPG project started in 2017 as a student project. The idea for it came from me and another Kajaani UAS student, Lommi, as we had both been talking about writing an MMO for a while. In fact, wanting to write an MMO was the primary reason I personally applied to the school's game development programme.

We spent two full project courses, each 2-3 months long, writing the game. During that time we got a lot of help from many different people: game art students created art, programming students helped us with some great tools and certain parts of the engine, and production students helped us get organized.

MUTA after 2 months of development, during a test with around 20 people online. The 64x64 art at this point was created by three Singaporean summer school students.

After (and between) said courses, it was just me and Lommi working on the game. I'm more of an engine guy, he's more of a gameplay guy, though neither of us is one thing exclusively. While it initially took us only two and a half months to get an OK-looking demo game running, writing an MMO engine and toolset properly takes a lot of time. And while such tools are in development, content creation is difficult, if not impossible. The lack of visible progress during a technology development phase such as this is, I think, problematic for people who are primarily driven by gameplay and visuals, and so after a while I was mostly working on the game alone, always telling my friend I would try to get the engine and tools ready for content development ASAP.

Last month, Lommi finally told me he felt the project had grown too big for him to keep working on on the side (he's also employed full-time and has been for quite a while). That leaves me as the only official developer of MUTA. But that's not such a big change after all: likely more than 90% of the current codebase was already written by me alone, as Lommi has not really been involved for the past year or more.

In fact, it's a bit of a relief for me. I no longer need to worry about getting the game ready for others to develop content for it. I'm working a full-time job and try to spend what time I can on MUTA, but often time is simply hard to come by.

With this organizational shift in mind, I have some more changes coming up. I'm planning on reworking the theme of the game and possibly making the art myself. I'm a terrible artist, so it will probably come down to simple indie pixel crap. But that's alright; the gameplay is the important part. As for the theme, I'm planning on simple high fantasy, since I'm not a good enough visual artist to present a universe anything like what we originally planned for: the original idea was a sword-and-sorcery world inspired by the works of Robert E. Howard. I'm of the opinion that the theme should support the gameplay and not the other way around. However, I don't want to see fireballs flying all over in the style of Warcraft either. It's going to be something more low-key than that.

Character "art" from my first-ever game project, Isogen.

For now, MUTA remains a hobby project I try to pour much of my free time into. Time will tell what it actually evolves into, but I've got high hopes that one day it will be a real, online MMORPG. If not that, at least the code will be available for anyone to inspect.

Code changes

Phew, it's been many months since I last wrote about MUTA, so a lot of things have changed in the codebase, and some of the changes I don't even remember anymore. Some of them include (in semi-chronological order):

- Reworking of the immediate mode GUI into a standalone library.

- Shared code cleanup (mostly just renaming things and organizing them into files)

- Moving from OpenAL Soft to MojoAL, at least on GNU/Linux. I don't know how great an idea this is, but MojoAL is easy to embed into the project, having only two source files as opposed to OpenAL Soft's CMake hell.

- New entity system for the client.

- Rewriting the client's rendering.

- Completely rewriting the master server.

- Writing a world database server.

- Writing a new async database API.

- packetwriter2 tool for network message serialization.

- Shared API and authentication for server-server connections (svchan_server and svchan_client).

- New generic hashtable written as a separate library.

Client entity system

The entity system on the client needed a rework. It was something of an entity-component system (and I know how pretentious that term is) and remains so. This job had two distinct motivations:

- Making the code clearer

- Performance

I feel like both goals were achieved. First, the code needed to be split into more source files, as previously all of the world code was in a single file (I feel this sort of isolation is more future-proof for this project). Second, I really wanted fewer weird macros and more flexibility in how components are implemented. To recap the new system:

- An entity has a fixed-size array of components.

- Each component type has an enumerator it's referred to by. The enum value is an index into an entity's component array.

- Components in an entity generally point to a handler structure. A component might have an iterable element in a tightly packed array associated with it, but this is not visible to the component's user: they access it through a set of functions.

- Components communicate mainly through events (callbacks).
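The core of that layout, an enum value used directly as an index into a fixed-size per-entity array, can be sketched roughly as follows. This is a minimal illustration, not MUTA's code: the enumerator names, `entity_set_component` and `entity_get_component` are hypothetical, and the handle is assumed to be a plain pointer to the component's handler structure.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical component enumerators: each value doubles as an index
 * into an entity's fixed-size component array. */
enum component_type {
    COMPONENT_MOBILITY,
    COMPONENT_ANIMATION,
    COMPONENT_RENDER,
    NUM_COMPONENT_TYPES
};

/* Assumed opaque handle: a pointer to the component's handler structure,
 * which lives elsewhere (for example in a pool owned by the world). */
typedef void *component_handle_t;

typedef struct entity {
    component_handle_t components[NUM_COMPONENT_TYPES]; /* NULL = not attached */
} entity_t;

static void entity_set_component(entity_t *entity, enum component_type type,
                                 component_handle_t handle) {
    assert(type < NUM_COMPONENT_TYPES);
    entity->components[type] = handle;
}

/* Constant-time lookup: the enumerator is the array index. */
static component_handle_t entity_get_component(const entity_t *entity,
                                               enum component_type type) {
    assert(type < NUM_COMPONENT_TYPES);
    return entity->components[type];
}
```

Because the array is sized by the enum, every entity pays for a slot per component type even when most are empty; the trade is a little memory for branch-free lookups.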

Components are defined by creating an instance of a static-duration component_definition_t struct.

```c
struct component_definition_t {
    int (*system_init)(world_t *world, uint32 num_components);
    void (*system_destroy)(world_t *world);
    void (*system_update)(world_t *world, float dt);
    component_handle_t (*component_attach)(entity_t *entity);
    void (*component_detach)(component_handle_t handle);
    entity_event_interest_t *entity_event_interests;
    uint32 num_entity_event_interests;
    component_event_interest_t *component_event_interests;
    uint32 num_component_event_interests;
    uint32 index; /* Autofilled, initialize to 0! */
    component_event_callback_t *component_event_callbacks; /* Autofilled, initialize to 0! */
};
```

So the component_definition_t structure is really just a set of callbacks. Components are also pooled, but the pools are members of world instances and hence not visible in the above example (the functions just accept a pointer to a world_t, as seen).

Using a component definition, components can be added to an entity and then manipulated.

```c
component_handle_t entity_attach_component(entity_t *entity,
    component_definition_t *component_definition);
```

The component handle returned by entity_attach_component can be used to access the component. The component could be laid out in memory in various ways, and the API does not restrict this, except that the handle must be a pointer whose address stays constant until the component is destroyed. Access goes through component-specific functions, for example:

```c
void mobility_component_set_speed(component_handle_t handle, float speed);
```
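One way a component can satisfy the "constant address until destruction" rule is a fixed-size pool with a free list: elements never move, so a pointer into the pool is a stable handle. The sketch below assumes that design. The pool size, field names and `mobility_component_get_speed` are hypothetical, and the pool is file-scope rather than a world_t member, with the entity and world parameters left out, to keep it self-contained.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_MOBILITY_COMPONENTS 1024 /* Hypothetical pool size */

typedef void *component_handle_t; /* Assumed: handle is a stable pointer */

typedef struct mobility_component {
    float speed;
    int   in_use;
    struct mobility_component *next_free; /* Free-list link while unused */
} mobility_component_t;

/* File-scope pool for brevity; elements never move, so handles stay valid. */
static mobility_component_t mobility_pool[MAX_MOBILITY_COMPONENTS];
static mobility_component_t *mobility_free_list;

static void mobility_system_init(void) {
    mobility_free_list = NULL;
    for (int i = MAX_MOBILITY_COMPONENTS - 1; i >= 0; --i) {
        mobility_pool[i].in_use = 0;
        mobility_pool[i].next_free = mobility_free_list;
        mobility_free_list = &mobility_pool[i];
    }
}

static component_handle_t mobility_component_attach(void) {
    mobility_component_t *c = mobility_free_list;
    if (!c)
        return NULL; /* Pool exhausted */
    mobility_free_list = c->next_free;
    c->in_use = 1;
    c->speed = 0.0f;
    return c;
}

static void mobility_component_detach(component_handle_t handle) {
    mobility_component_t *c = handle;
    assert(c && c->in_use);
    c->in_use = 0;
    c->next_free = mobility_free_list; /* Slot is reused by the next attach */
    mobility_free_list = c;
}

void mobility_component_set_speed(component_handle_t handle, float speed) {
    mobility_component_t *c = handle;
    assert(c && c->in_use);
    c->speed = speed;
}

float mobility_component_get_speed(component_handle_t handle) {
    const mobility_component_t *c = handle;
    assert(c && c->in_use);
    return c->speed;
}
```

Users only ever see the opaque handle, so the pool could later be swapped for something else without touching call sites.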

Component event callbacks are attached to component definitions rather than to individual components. This gives up some flexibility, but saves memory and likely performs better in the average case, since in MUTA certain sets of components are very common for a given entity type (creatures have one set of components, players another, etc.). The callbacks are mostly called immediately when a component fires an event. An example use case for events is animation: when the mobility component fires a "start move" event, the event can trigger the animation component to start playing a different animation.
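Here is a minimal sketch of per-definition callbacks, using the "start move" example. The event enum, `component_def_t` and `entity_fire_event` are hypothetical and much simpler than MUTA's interest lists. The point is that the callback table lives in the definition, so every entity with an animation component shares one table instead of storing callbacks per instance.

```c
#include <assert.h>
#include <stddef.h>

enum entity_event { EVENT_START_MOVE, EVENT_STOP_MOVE, NUM_ENTITY_EVENTS };
enum { COMPONENT_MOBILITY, COMPONENT_ANIMATION, NUM_COMPONENT_TYPES };

struct entity;
typedef void (*event_callback_t)(struct entity *entity, void *component);

/* Hypothetical definition: callbacks are shared by all instances. */
typedef struct component_def {
    event_callback_t callbacks[NUM_ENTITY_EVENTS]; /* NULL = not interested */
} component_def_t;

typedef struct entity {
    const component_def_t *defs[NUM_COMPONENT_TYPES]; /* NULL = not attached */
    void *components[NUM_COMPONENT_TYPES];
} entity_t;

/* Immediately calls the event's callback on every attached component whose
 * definition registered interest, in component-index order. */
static void entity_fire_event(entity_t *entity, enum entity_event event) {
    assert(event < NUM_ENTITY_EVENTS);
    for (int i = 0; i < NUM_COMPONENT_TYPES; ++i) {
        const component_def_t *def = entity->defs[i];
        if (def && def->callbacks[event])
            def->callbacks[event](entity, entity->components[i]);
    }
}

/* Example: the animation component switches clips when movement starts. */
typedef struct animation_component { int current_clip; } animation_component_t;
enum { CLIP_IDLE, CLIP_WALK };

static void animation_on_start_move(entity_t *entity, void *component) {
    (void)entity;
    ((animation_component_t *)component)->current_clip = CLIP_WALK;
}

static const component_def_t animation_def = {
    .callbacks = { [EVENT_START_MOVE] = animation_on_start_move },
};
```

In this sketch the mobility code would call `entity_fire_event(entity, EVENT_START_MOVE)` when a move begins, without knowing which other components care.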

Rewriting the client's rendering

This one's pretty simple. Tile depth sorting was moved to the GPU, and entity rendering was changed to fit the new entity system.

Previously, the world rendering system walked through every rendering component in the world each frame, looked up the entity's position from a separate memory address, then decided whether to cull it, and so on. In the new system, positions are cached in more CPU cache-friendly structures. For example, if an entity moves, an event is fired to its rendering component, and the rendering component logic culls the entity and caches its position in an array of render commands. The array of render commands is iterated through every frame to draw all visible entities. Render commands contain all the data necessary to place the entity's sprites properly on the screen.
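The caching idea can be sketched as follows. `render_cmd_t`, the fixed view rectangle and the function names are made up for illustration, and culling is reduced to a point-in-rectangle test. Culling happens in the "moved" handler, which writes into a tightly packed array. The per-frame pass only walks that array and never touches entity data.

```c
#include <assert.h>

#define MAX_RENDER_CMDS 256 /* Hypothetical capacity */

typedef struct render_cmd {
    int   entity_id; /* Which entity this command draws */
    float x, y;      /* Cached screen-space position */
    int   sprite_id;
} render_cmd_t;

typedef struct render_component {
    int entity_id;
    int sprite_id;
    int cmd_index;   /* Index into render_cmds, or -1 when culled */
} render_component_t;

static render_cmd_t        render_cmds[MAX_RENDER_CMDS];
static render_component_t *render_cmd_owners[MAX_RENDER_CMDS];
static int                 num_render_cmds;

/* Hypothetical visible area in screen space. */
static const float VIEW_W = 800.0f, VIEW_H = 600.0f;

/* Swap-remove keeps the command array tightly packed. */
static void render_remove_cmd(render_component_t *rc) {
    int last = --num_render_cmds;
    render_cmds[rc->cmd_index] = render_cmds[last];
    render_cmd_owners[rc->cmd_index] = render_cmd_owners[last];
    render_cmd_owners[rc->cmd_index]->cmd_index = rc->cmd_index;
    rc->cmd_index = -1;
}

/* Called from the entity's "moved" event, not every frame. */
static void render_on_entity_moved(render_component_t *rc, float x, float y) {
    int visible = x >= 0.0f && x < VIEW_W && y >= 0.0f && y < VIEW_H;
    if (!visible) {
        if (rc->cmd_index >= 0)
            render_remove_cmd(rc);
        return;
    }
    if (rc->cmd_index < 0) {
        assert(num_render_cmds < MAX_RENDER_CMDS);
        rc->cmd_index = num_render_cmds++;
        render_cmd_owners[rc->cmd_index] = rc;
    }
    render_cmds[rc->cmd_index] = (render_cmd_t){rc->entity_id, x, y, rc->sprite_id};
}

/* Per-frame pass: touches only the packed command array. */
static int render_frame(void (*draw)(const render_cmd_t *cmd)) {
    for (int i = 0; i < num_render_cmds; ++i)
        draw(&render_cmds[i]);
    return num_render_cmds;
}
```

A real version would also have to re-cull everything when the camera moves, since visibility then changes without any entity moving.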

Master server rewrite

The master server is the authoritative part of a single MUTA shard/world. It knows all the entities in the world and generates unique IDs for everything. Multiple simulation servers connect to the master server, each simulating a different part of the world map.

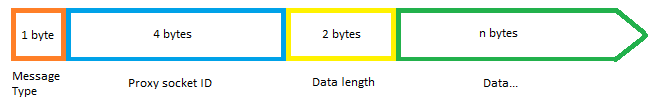

While writing MUTA's proxy server (which was also the subject of my bachelor's thesis), I feel like I finally "got" how I want to do multithreading with servers: handle all state on one thread, and have other threads post events to that thread. The event loop works with a wait function akin to poll. Basically, an event-based approach.
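A poll-like wait function of that kind can be built from a mutex-protected queue and a condition variable. The sketch below is an assumption about how this might look with POSIX threads, not MUTA's implementation. Unlike the `event_wait` in the master server loop pseudo-code, it takes the queue as an explicit first parameter, and `event_t` is a placeholder with two int fields.

```c
#define _POSIX_C_SOURCE 200809L /* For clock_gettime */
#include <assert.h>
#include <errno.h>
#include <pthread.h>
#include <time.h>

#define EVENT_QUEUE_CAP 256 /* Hypothetical capacity */

typedef struct event { int type; int data; } event_t;

typedef struct event_queue {
    pthread_mutex_t mutex;
    pthread_cond_t  cond;
    event_t         events[EVENT_QUEUE_CAP];
    int             head, count; /* Ring buffer */
} event_queue_t;

static void event_queue_init(event_queue_t *q) {
    pthread_mutex_init(&q->mutex, NULL);
    pthread_cond_init(&q->cond, NULL);
    q->head = q->count = 0;
}

/* Any thread: enqueue and wake the state-owning thread.
 * Returns 0 on success, -1 if the queue is full. */
static int event_post(event_queue_t *q, event_t ev) {
    pthread_mutex_lock(&q->mutex);
    if (q->count == EVENT_QUEUE_CAP) {
        pthread_mutex_unlock(&q->mutex);
        return -1;
    }
    q->events[(q->head + q->count) % EVENT_QUEUE_CAP] = ev;
    q->count++;
    pthread_cond_signal(&q->cond);
    pthread_mutex_unlock(&q->mutex);
    return 0;
}

/* Owning thread only. Like poll(): blocks until at least one event is
 * queued or timeout_ms elapses, then drains up to max events. */
static int event_wait(event_queue_t *q, event_t *out, int max, int timeout_ms) {
    assert(max > 0 && timeout_ms >= 0);
    struct timespec deadline; /* pthread_cond_timedwait uses CLOCK_REALTIME by default */
    clock_gettime(CLOCK_REALTIME, &deadline);
    deadline.tv_sec  += timeout_ms / 1000;
    deadline.tv_nsec += (long)(timeout_ms % 1000) * 1000000L;
    if (deadline.tv_nsec >= 1000000000L) {
        deadline.tv_sec++;
        deadline.tv_nsec -= 1000000000L;
    }
    pthread_mutex_lock(&q->mutex);
    while (q->count == 0) { /* Loop guards against spurious wakeups */
        if (pthread_cond_timedwait(&q->cond, &q->mutex, &deadline) == ETIMEDOUT)
            break;
    }
    int n = 0;
    while (n < max && q->count > 0) {
        out[n++] = q->events[q->head];
        q->head = (q->head + 1) % EVENT_QUEUE_CAP;
        q->count--;
    }
    pthread_mutex_unlock(&q->mutex);
    return n;
}
```

Only the owning thread ever calls `event_wait`, so all game state can stay unlocked. The mutex protects nothing but the queue itself.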

Since then, I've been wanting to rewrite the rest of MUTA's server applications to use a similar architecture. To explain a little, the table below lists the programs that make up the server-side software.

| Program | Directory name in repo | Event-based? |
|---|---|---|
| Master | server | No |
| Simulation Server | worldd | No |
| Login Server | login-server | Yes |
| Proxy Server | proxy | Yes |
| Old database server | db-server | No |
| World database server | world_db (server_rewrite branch) | Yes |

Don't worry about the discrepancies in subproject naming conventions; I do have a plan for them now, believe it or not. It's just that the plan keeps changing...

For the master server, the architecture change means that the main loop will no longer only run at a fixed rate: it will also be able to respond to events immediately, using blocking event queues. This is achieved with a structure akin to the pseudo-code example below.

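```c
int target_delta = 17; /* Milliseconds, roughly 60 ticks per second */
int last_tick = time_now();
int time_to_wait = target_delta;

for (;;) {
    /* Block until events arrive or the time left in this tick runs out */
    event_t events[64];
    int num_events = event_wait(events, 64, time_to_wait);
    for (int i = 0; i < num_events; ++i)
        _handle_event(&events[i]);

    /* Not yet time for a tick: wait only for the remainder */
    int now = time_now();
    int delta_time = now - last_tick;
    if (delta_time < target_delta) {
        time_to_wait = target_delta - delta_time;
        continue;
    }

    /* Run the fixed-rate update and restart the tick timer */
    update(delta_time);
    last_tick = now;
    time_to_wait = target_delta;
}
```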

Changing the architecture has meant a rather large amount of refactoring, as it affects nearly all systems on the master server. Since this has largely meant a complete rewrite, I have also taken to reworking some of the systems into a mold I feel is better suited for the future. For example, the world/entity system that's used to control player characters, creatures and other game objects is being written completely from scratch in the server_rewrite Git branch. The world API contains functions such as the ones below.

```c
uint32 world_spawn_player(uint32 instance_id, client_t *client,
    player_guid_t id, const char *name, int race, int sex, int position[3], int direction);
void world_despawn_player(uint32 player_index);
int world_player_find_path(uint32 player_index, int x, int y, int z);
```

Calls such as the ones above are asynchronous in nature, as they involve the simulation server instances connected to the master server. Hence, I've been thinking of reworking them so that they would accept a callback, "on_request_finished" (or whatever). That would be alright for code clarity, but it would involve some memory overhead. The alternative is to handle finished requests inside the world API itself, meaning it will have to call back into whichever other APIs called it. You know, I'm constantly pondering where the line of abstraction should lie: tight coupling isn't pretty, but abstraction often comes at a great cost in program resources. In the above case, there's little reason to create a data structure for saving callbacks and their user data (void pointers) if there's really only one logical path the code can take when a response arrives. I try not to fall into the trap of "OOP" and "design patterns" just for the sake of such silly things, but at the same time, sometimes I have an engineer's urge to overengineer things. Usually I end up with the more practical, less abstraction-based approach. After all, I know every dark corner of my own program, or so I at least believe.
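To make the trade-off concrete, here is a sketch of the callback variant. Every in-flight request has to remember a function pointer and a `void *` until the simulation server replies, and that per-request table is the memory overhead in question. All names (`pending_request_t`, `world_request_path`, `world_on_path_reply`, `store_result`) are hypothetical, and the actual network message is omitted. The tightly coupled alternative would drop the table entirely and have `world_on_path_reply` call one fixed function directly.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_PENDING_REQUESTS 64 /* Hypothetical limit */

typedef void (*request_callback_t)(void *user_data, int result);

/* The per-request state the callback approach must keep around. */
typedef struct pending_request {
    uint32_t           id; /* 0 = free slot */
    request_callback_t callback;
    void              *user_data;
} pending_request_t;

static pending_request_t pending[MAX_PENDING_REQUESTS];
static uint32_t          next_request_id = 1; /* Wrap-around ignored */

/* Issues a request (sending to the simulation server is omitted) and
 * returns its id, or 0 if too many requests are in flight. */
static uint32_t world_request_path(request_callback_t cb, void *user_data) {
    assert(cb != NULL);
    for (int i = 0; i < MAX_PENDING_REQUESTS; ++i) {
        if (pending[i].id == 0) {
            pending[i] = (pending_request_t){next_request_id++, cb, user_data};
            return pending[i].id;
        }
    }
    return 0;
}

/* Called when the simulation server's reply arrives. Unknown ids are ignored. */
static void world_on_path_reply(uint32_t request_id, int result) {
    for (int i = 0; i < MAX_PENDING_REQUESTS; ++i) {
        if (pending[i].id == request_id) {
            pending_request_t req = pending[i];
            pending[i].id = 0; /* Free the slot before calling out */
            req.callback(req.user_data, result);
            return;
        }
    }
}

/* Example callback: stores the result in an int owned by the caller. */
static void store_result(void *user_data, int result) {
    *(int *)user_data = result;
}
```

When only one caller ever issues a given request, the table, the lookup and the indirect call are all pure overhead, which is the argument for the direct approach.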

The rewrite has taken about two months now, and I think it will take some more time yet, partly because I must also make changes to other programs in the server-side stack. At the same time, new programs are coming in, such as the world database server. It will be interesting to see how things work out when the server starts up again for the first time... Well, maybe frustrating is a more appropriate word.

World database server

The world database server is a new addition to the server-side stack. Previously, MUTA had a "db-server" application, but there was no separation between individual world/shard databases and account databases. Now that separation is coming.

The world database server (WDB) is an intermediate program between the MySQL server and the MUTA master server. Its sole purpose is to serve requests from master servers through a binary protocol while caching relevant results. The intention is that this is the de facto way to access a single shard's database.
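The caching role can be pictured as a read-through cache in front of MySQL. In the sketch below, the direct-mapped table, the cache size and `db_load_character_count` (a stand-in for a real MySQL query) are all assumptions, and the answer is returned synchronously for simplicity, whereas the real WDB answers master servers asynchronously and decides for itself which results are worth caching.

```c
#include <assert.h>
#include <stdint.h>

#define CACHE_SLOTS 1024 /* Hypothetical; a power of two for cheap masking */

typedef struct cache_entry {
    uint64_t account_id;
    int      valid;
    uint32_t num_characters; /* The cached query result */
} cache_entry_t;

static cache_entry_t cache[CACHE_SLOTS];
static int           db_queries_made; /* Counts simulated MySQL round trips */

/* Stand-in for a real database query. */
static uint32_t db_load_character_count(uint64_t account_id) {
    db_queries_made++;
    return (uint32_t)(account_id % 4);
}

/* Direct-mapped read-through cache: a hit is answered from memory,
 * a miss queries the database and remembers the answer. */
static uint32_t wdb_get_character_count(uint64_t account_id) {
    cache_entry_t *e = &cache[account_id & (CACHE_SLOTS - 1)];
    if (e->valid && e->account_id == account_id)
        return e->num_characters;
    e->account_id     = account_id;
    e->num_characters = db_load_character_count(account_id);
    e->valid          = 1;
    return e->num_characters;
}

/* Must be called whenever the underlying data changes (e.g. character creation). */
static void wdb_invalidate(uint64_t account_id) {
    cache_entry_t *e = &cache[account_id & (CACHE_SLOTS - 1)];
    if (e->valid && e->account_id == account_id)
        e->valid = 0;
}
```

Because all of a shard's database traffic goes through the WDB, it is the one place that sees every write, which is what makes invalidation like this tractable.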

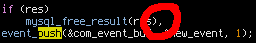

There's an asynchronous API associated with the WDB. It consists of a set of functions, each of which performs a specific query. The query functions also take callbacks as parameters; the callbacks are called when the query completes or fails.

```c
wdbc_query_id_t wdbc_query_player_character_list(
    void (*on_complete)(wdbc_query_id_t query_id, void *user_data, int error,
        wdbc_player_character_t *characters, uint32 num_characters),
    void *user_data, account_guid_t account_id);
```

Not much else to say about it right now... It's event-based like the rest of the newer server-side applications. Will keep working on it!

packetwriter2

Back when the MUTA project was started, Lommi wrote an application called MUTA_Packetwriter for network packet serialization. It generates C code (structs and serialization functions) from a simple file format in which the fields of each network packet are defined.

Lommi's tool has saved us countless hours of writing and debugging repetitive code, but now that he is no longer working on the project, and many new packets are making their way into the protocol with new applications such as the world database server, I've deemed it necessary to write a new version of this program.

packetwriter2 will use a new, simpler file format, the ".def" format used in many of MUTA's data files. It will support features I've wanted for a long time, such as arrays of structs and nested structs. I've started writing the parser, and below is an example of the file format.

```
include: types.h
include: common_defs.h

group: twdbmsg
    first_opcode = 0

group: fwdbmsg
    first_opcode = 0

struct: wdbmsg_player_character_t
    query_id = uint32
    id = uint64
    name = int8{MIN_CHARACTER_NAME_LEN, MAX_CHARACTER_NAME_LEN}
    race = int (0 - 255)
    sex = int (0 - 1)
    instance_id = uint32
    x = int32
    y = int32
    z = int8

packet: fwdbmsg_reply_query_player_character_list
    __group = fwdbmsg
    query_id = uint32
    characters = wdbmsg_player_character_t{MAX_CHARACTERS_PER_ACC}
```
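To show what a generator might produce from the `wdbmsg_player_character_t` entry, here is a hand-written sketch of a struct plus a serialization function. It is not packetwriter2's actual output. Several details are assumptions: the little-endian byte order, the one-byte length prefix for `name` (reading `int8{MIN, MAX}` as a variable-length array with bounds), packing the ranged `int` fields into one byte each, and the placeholder values of the two name-length constants.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Placeholder values; the real constants live in common_defs.h. */
#define MIN_CHARACTER_NAME_LEN 2
#define MAX_CHARACTER_NAME_LEN 16

typedef struct wdbmsg_player_character {
    uint32_t query_id;
    uint64_t id;
    uint8_t  name_len;
    int8_t   name[MAX_CHARACTER_NAME_LEN];
    uint8_t  race;   /* int (0 - 255): fits in one byte on the wire */
    uint8_t  sex;    /* int (0 - 1) */
    uint32_t instance_id;
    int32_t  x, y;
    int8_t   z;
} wdbmsg_player_character_t;

static uint8_t *put_le(uint8_t *p, uint64_t v, int bytes) {
    for (int i = 0; i < bytes; ++i)
        *p++ = (uint8_t)(v >> (8 * i));
    return p;
}

/* Writes msg to buf in little-endian order. Returns bytes written, or -1 if
 * a field is outside its declared range or buf is too small. */
static int wdbmsg_player_character_write(const wdbmsg_player_character_t *msg,
                                         uint8_t *buf, size_t buf_size) {
    size_t needed = 4 + 8 + 1 + msg->name_len + 1 + 1 + 4 + 4 + 4 + 1;
    if (msg->name_len < MIN_CHARACTER_NAME_LEN ||
        msg->name_len > MAX_CHARACTER_NAME_LEN || msg->sex > 1 ||
        buf_size < needed)
        return -1;
    uint8_t *p = buf;
    p = put_le(p, msg->query_id, 4);
    p = put_le(p, msg->id, 8);
    *p++ = msg->name_len; /* Length prefix, then only the used bytes */
    memcpy(p, msg->name, msg->name_len);
    p += msg->name_len;
    *p++ = msg->race;
    *p++ = msg->sex;
    p = put_le(p, msg->instance_id, 4);
    p = put_le(p, (uint32_t)msg->x, 4);
    p = put_le(p, (uint32_t)msg->y, 4);
    *p++ = (uint8_t)msg->z;
    assert((size_t)(p - buf) == needed);
    return (int)needed;
}
```

Writing this by hand for every message, plus the matching read function, is exactly the kind of repetitive, bug-prone code a generator is meant to eliminate. An array field like `characters` would add a count prefix and a loop over the element writer.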

Along the way, I think the encryption scheme needs a rework, too. Not the basic algorithms behind it (MUTA uses libsodium for that), but the fact that messages are currently encrypted on a per-message-type basis. Being able to turn encryption on and off within the stream would save bandwidth and improve performance, as multiple messages could be encrypted together as a single batch.

Keepin' busy

Honestly, I've been having a tough time scraping up enough time to work on MUTA since starting my current job. Also, it turns out motivating myself is difficult if I don't constantly have something to prove to someone (you know, like progress reports to people you know in real life and stuff). Guess I should go and get one of those productivity self-help books soon.